BACKUP AND ARCHIVE ORCHESTRATION

Growing data volumes, the proliferation of server virtualization, the need to leverage private and public clouds, and the increased need for high availability are presenting new challenges in modernizing legacy backup and archive solutions. Explore our white papers to learn how you can modernize your environment using best practices we’ve honed over nearly two decades in this space.

WHITE PAPER: GET THE FREEDOM OF HIGH-PERFORMANCE BACKUP

In this white paper, you’ll learn how to take a strategic approach to orchestrating your backup and archive environment to reduce storage costs and processing overhead. Designed as an enterprise-class solution, FalconStor StorSafe VTL can achieve single-node aggregate backup speeds of 40 TB/hour, helping you solve the single biggest issue in backup: meeting the backup window.

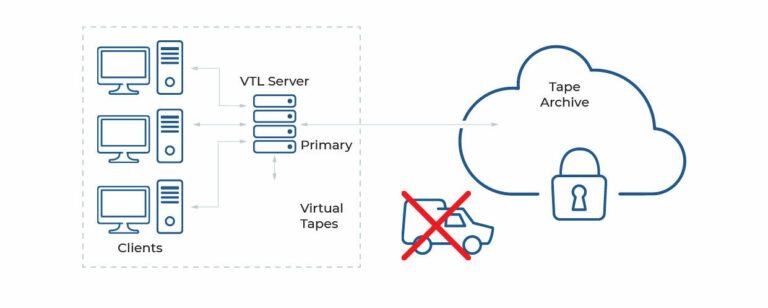

You’ll also learn how to leverage cloud object storage for long-term data retention and recovery, eliminating the need to physically move tapes offsite for storage. This reduces costs and, in remote and branch offices, eliminates the need for non-IT employees to intervene.

And with heterogeneous support for major operating systems such as Microsoft Windows, Unix, and Linux, as well as certification with backup software applications, you can protect existing investments and avoid rip-and-replace scenarios.

Resources

Certification Matrix

The FalconStor Certification Matrix outlines all of the hardware and software certified to work with FalconStor products. The matrix is updated continually, so please check back frequently.

StorSafe® VTL with StorSight® Product Collateral

Customer Success Story

Service Express offers MSP services to a broad range of companies across Europe and North America.

VTL Best Practices Guide

This document is intended for new VTL users who have a good understanding of backup applications and a basic understanding of virtual tape libraries. It is assumed that the system has already been architected and deployed in the environment.